Introduction

Artificial intelligence is everywhere—helping doctors diagnose illnesses, guiding financial decisions, and even ensuring cars navigate the road safely. Yet, with great power comes even greater responsibility. If people can’t trust AI technologies to perform reliably and ethically, their adoption could falter. Enter the Keeper Standards Test, a framework designed to ensure AI systems earn and maintain trust.

This blog explores how it works, why it’s vital, and the ways it builds confidence in AI technologies. Let’s dive into how rigorous testing can transform skepticism into trust.

Understanding the Keeper Standards Test

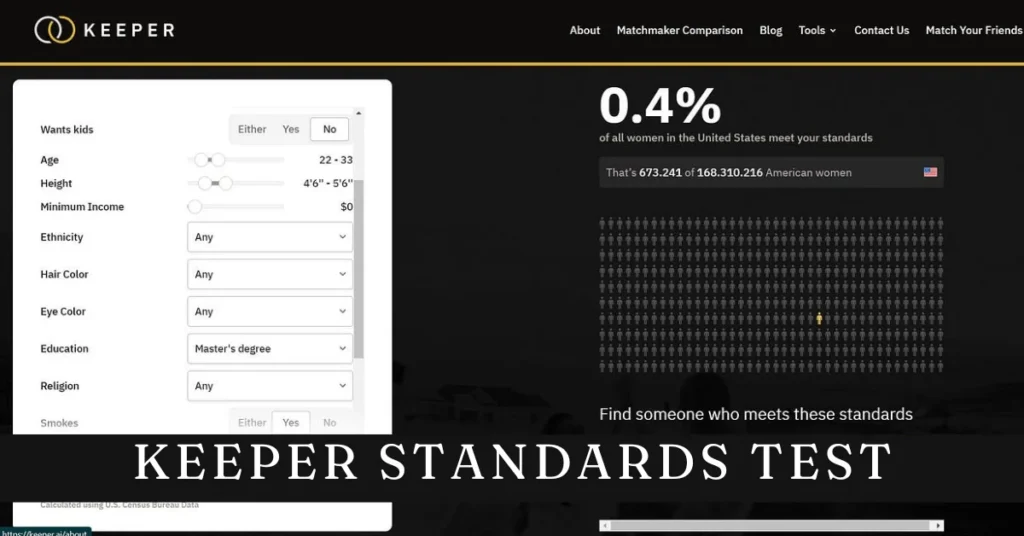

What Is the Keeper Standards Test?

The Keeper Standards Test isn’t just a checklist; it’s a comprehensive framework designed to evaluate AI systems against critical criteria. Think of it as a guardian, ensuring AI technologies meet high standards for reliability, accuracy, and fairness. The test doesn’t just ask, “Does this AI work?” It goes further, questioning “Does it work well, ethically, and consistently?”

By setting clear evaluation goals, the test provides both developers and users with confidence that an AI system is ready for the real world.

A Brief History of the Test

The Keeper Standards Test emerged in response to growing concerns about AI reliability and bias. In its early days, AI was like the Wild West: exciting, but chaotic. Developers raced to create groundbreaking systems, often leaving questions of trust as an afterthought. Recognizing this gap, researchers and industry leaders collaborated to create a structured approach for evaluating AI.

Now, the Keeper Standards Test stands as a cornerstone in the AI industry, shaping how organizations build and assess their technologies.

The Importance of Trust in AI Technologies

Why Trust Is Essential

Trust is the glue that holds innovation together. Without it, even the most advanced AI will fail. Imagine a self-driving car that can’t be trusted to make good decisions, or a healthcare AI that provides inconsistent diagnoses. People won’t use technology they can’t rely on.

Industries like finance, transport, and healthcare, where precision and accountability matter most, rely on trust. The Keeper Standards Test checks that AI systems meet those high standards so they can be adopted seamlessly.

The Dangers of Distrust

When there’s no trust in AI, the consequences are dire. Picture a financial AI tool that mismanages data and loses millions, or a biased recruitment AI that discriminates against candidates. These failures harm users and erode public trust in AI overall.

The Keeper Standards Test helps prevent these scenarios by catching the cracks during testing, before a system reaches users. Better to fix the foundation than watch the whole thing come crashing down.

The Keeper Standards Test

Clear Expectations

The first step to building trust is to set expectations. The Keeper Standards Test sets clear goals for what an AI system should do. Whether it’s accurate predictions, ethical decision-making, or fast response times, the test provides measurable targets so the AI is fit for purpose.

Real World Scenario Testing

AI doesn’t exist in a vacuum; it operates in complex, unpredictable environments. That’s why the Keeper Standards Test includes real-world scenarios in the testing process. By simulating real-world conditions, the test checks that AI systems perform under pressure.

Metrics

Numbers don’t lie. The Keeper Standards Test uses metrics like accuracy rates, response times and error margins to measure an AI’s performance. These tangible results prove an AI’s capabilities and build trust with users and stakeholders.
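To make those three metrics concrete, here is a minimal Python sketch of how they might be computed from a batch of test results. The function name `summarize`, the sample data, and the choice of a normal-approximation 95% margin of error are all assumptions made for illustration; the Keeper Standards Test itself does not prescribe this code.

```python
import math
from statistics import mean


def summarize(predictions, labels, response_times_ms, z=1.96):
    """Return accuracy, its approximate margin of error, and mean latency.

    Hypothetical helper: z=1.96 corresponds to a ~95% confidence level
    under the normal approximation for a proportion.
    """
    if len(predictions) != len(labels) or not labels:
        raise ValueError("predictions and labels must be non-empty and equal length")

    n = len(labels)
    correct = sum(p == y for p, y in zip(predictions, labels))
    accuracy = correct / n

    # Margin of error for a proportion: z * sqrt(p * (1 - p) / n).
    margin = z * math.sqrt(accuracy * (1 - accuracy) / n)

    return {
        "accuracy": accuracy,
        "margin_of_error": margin,
        "mean_response_ms": mean(response_times_ms),
    }


# Made-up sample data, purely for illustration.
report = summarize(
    predictions=[1, 0, 1, 1, 0, 1, 0, 0],
    labels=[1, 0, 1, 0, 0, 1, 1, 0],
    response_times_ms=[120, 95, 110, 130, 105, 98, 115, 107],
)
print(report)
```

With only eight samples the margin of error is wide, which is itself a useful reminder: a headline accuracy figure means little without knowing how much data stands behind it.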

Building Reliability through Testing

Stress Testing and Adaptability Checks

Life is unpredictable, and AI systems need to adapt to the unexpected. The Keeper Standards Test puts technologies through stress testing to see how they perform under pressure, and through adaptability checks to see how well they learn from new data or adjust to changing circumstances.
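One simple way to picture a stress test is to add increasing amounts of noise to a system’s inputs and watch how accuracy holds up. The Python sketch below does that with a toy threshold rule standing in for a real model. Everything here (`toy_model`, `accuracy_under_noise`, the noise levels, the data) is hypothetical and only illustrates the idea, not how the Keeper Standards Test actually runs its checks.

```python
import random


def toy_model(x):
    """Hypothetical stand-in for the system under test: a fixed threshold rule."""
    return 1 if x >= 0.5 else 0


def accuracy_under_noise(model, inputs, labels, noise_scale, seed=0):
    """Perturb each input with Gaussian noise and return the resulting accuracy."""
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    noisy = [x + rng.gauss(0.0, noise_scale) for x in inputs]
    hits = sum(model(x) == y for x, y in zip(noisy, labels))
    return hits / len(labels)


# Made-up inputs; labels are what the toy model says on clean data.
inputs = [0.05, 0.2, 0.35, 0.45, 0.55, 0.65, 0.8, 0.95]
labels = [toy_model(x) for x in inputs]

results = {
    scale: accuracy_under_noise(toy_model, inputs, labels, scale)
    for scale in (0.0, 0.05, 0.2, 0.5)
}

for scale, acc in results.items():
    print(f"noise={scale:<4} accuracy={acc:.2f}")
```

A system whose accuracy drops sharply at small noise levels is fragile; one that degrades gradually is more likely to cope with messy real-world inputs.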

Ethical Audits

Bias in AI isn’t just a technical bug; it’s a trust killer. An AI that unfairly favours one group over another can cause harm and outrage. Ethical audits are part of the Keeper Standards Test: they identify and mitigate biases so the system is fair for everyone, not just the few.
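As a rough illustration of what one audit check could look like, the Python sketch below compares how often a system gives a favourable decision to each group. The helper names, the sample decisions, and the 0.8 cut-off (borrowed from the "four-fifths" rule of thumb used in US employment guidance) are assumptions for this example, not part of the Keeper Standards Test as described here.

```python
from collections import defaultdict


def selection_rates(decisions, groups):
    """Return the share of favourable decisions (1s) for each group."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        selected[group] += int(decision)
    return {g: selected[g] / totals[g] for g in totals}


def disparity_ratio(rates):
    """Lowest selection rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


# Made-up hiring decisions for two hypothetical groups, A and B.
decisions = [1, 1, 0, 1, 0, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "A", "B", "B", "B", "B", "B"]

rates = selection_rates(decisions, groups)
ratio = disparity_ratio(rates)

THRESHOLD = 0.8  # assumed cut-off for flagging a gap worth investigating
print(rates, f"ratio={ratio:.2f}", "FLAG" if ratio < THRESHOLD else "ok")
```

A low ratio doesn’t prove discrimination on its own, but it tells auditors where to look more closely.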

Building User Trust with Transparent Processes

Transparency in Testing

Transparency is trust’s best friend. The Keeper Standards Test is clear about the testing process and its results. When users know how an AI was tested, they’re more likely to trust its capabilities. Transparency turns a black box into an open book.

Iterative Improvement

No system is perfect on the first try. The Keeper Standards Test embraces iterative improvement, encouraging developers to refine their AI based on test results. This commitment to continuous improvement demonstrates accountability and dedication to delivering the best possible technology.

Real-Life Applications and Case Studies

Success Stories in AI

Many industries have already reaped the benefits of the Keeper Standards Test. In healthcare, for instance, an AI-powered diagnostic tool passed rigorous Keeper Standards evaluations, earning the trust of doctors and patients alike. Similarly, a transportation company implemented the test to validate its self-driving technology, leading to safer and more reliable vehicles on the road.

Lessons from Failures

Unfortunately, not every AI system has prioritized rigorous testing. One notable example involved a recruitment AI that displayed significant bias in hiring decisions due to incomplete testing. This failure underscored the importance of the Keeper Standards Test in identifying flaws before systems are deployed.

Future Implications for Trust in AI

Advancements in Technology

As AI continues to evolve, so too will the Keeper Standards Test. Emerging technologies like machine learning and big data are expected to play a significant role in refining evaluation processes. The test will adapt to ensure it stays ahead of the curve, maintaining its relevance in an ever-changing tech landscape.

Regulatory Frameworks

Governments and regulatory bodies are beginning to recognize the importance of standardized AI testing. The Keeper Standards Test could serve as a model for future regulations, creating a unified approach to evaluating and certifying AI systems. This added layer of oversight would further enhance public trust.

Conclusion

Trust is the foundation upon which successful AI technologies are built. The Keeper Standards Test ensures that foundation is strong by evaluating systems for reliability, ethics, and adaptability. Its rigorous approach not only identifies potential issues but also inspires confidence among users, developers, and regulators.

As AI becomes an integral part of our lives, frameworks like the Keeper Standards Test will play a pivotal role in shaping its future. By prioritizing testing and transparency, we can unlock the full potential of AI technologies while keeping trust at the forefront. For developers and stakeholders, the message is clear: test early, test often, and test with purpose.